The input and output data are stored in LMDB format.

http://caffe.berkeleyvision.org/tutorial/data.html

When you run MNIST with LeNet, you can save the log to a file with the following command:

./examples/mnist/train_lenet.sh > mylog 2>&1

The log shows that cudnn_conv_layer.cpp is used for the convolution layer.
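One way to see which source files show up in the saved log is to tally them. The following is a minimal sketch, assuming the log lines follow the layout of the sample lines quoted in this post (severity letter and date, time, thread id, file:line, then the message). The name count_source_files is hypothetical, not part of Caffe.

```python
import re
from collections import Counter

# Layout assumed from the sample lines in this post:
# <severity><MMDD> <HH:MM:SS.micros> <thread id> <file>:<line>] <message>
GLOG_LINE = re.compile(
    r"^(?P<sev>[IWEF])(?P<date>\d{4}) (?P<time>\d{2}:\d{2}:\d{2}\.\d+)\s+"
    r"(?P<tid>\d+) (?P<file>[^:\s]+):(?P<line>\d+)\] ?(?P<msg>.*)$"
)

def count_source_files(lines):
    """Count how many log lines each source file emitted (hypothetical helper)."""
    counts = Counter()
    for line in lines:
        match = GLOG_LINE.match(line.strip())
        if match:  # lines that do not fit the layout are skipped
            counts[match.group("file")] += 1
    return counts

sample = [
    "I0211 23:02:12.374897 18363 cudnn_conv_layer.cu:23] =>leiming: blobs[0] 20 1 5 5 (500)",
    "I0211 23:02:12.374899 18363 cudnn_conv_layer.cu:24] =>leiming: bottom size :1",
    "this line is not in the expected layout",
]
counts = count_source_files(sample)
print(counts.most_common())
```

To run it on the saved file, pass the open file instead of the sample list, for example count_source_files(open("mylog")).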

Solving LeNet starts from solver.cpp, and for every iteration it uses sgd_solver.cpp.

During training/testing, solver.cpp is used.

The Step() function is called, which uses the TestAll() function to check performance.

In src/caffe/net.cpp, ForwardFromTo() accumulates the loss by calling each layer's Forward() function.
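The control flow described above can be sketched as a toy loop. This is only an illustration in Python, not Caffe's code: the functions step, forward_from_to and the toy layers are hypothetical stand-ins, and the real Step() also runs the backward pass, applies updates and handles snapshots.

```python
def forward_from_to(layers, start, end):
    """Run layers[start..end] in order and sum the loss each one reports."""
    loss = 0.0
    for i in range(start, end + 1):
        loss += layers[i]()  # each toy layer returns its loss contribution
    return loss

def step(iters, layers, test_interval, test_all):
    """Toy solver loop: test every test_interval iterations, then run a forward pass."""
    losses = []
    for it in range(iters):
        if test_interval and it % test_interval == 0:
            test_all(it)
        losses.append(forward_from_to(layers, 0, len(layers) - 1))
    return losses

tested_at = []
# Assumption for the toy: only the last layer produces a loss.
toy_layers = [lambda: 0.0, lambda: 0.0, lambda: 0.25]
losses = step(4, toy_layers, test_interval=2, test_all=tested_at.append)
print(tested_at, losses)
```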

Net and Layer objects are managed through shared_ptr.

Like Forward(), Backward() also switches between Backward_cpu() and Backward_gpu().

Forward_gpu() and Backward_gpu() are both virtual functions.
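The dispatch idea can be illustrated with a small Python analogy. It is a sketch, not Caffe's C++ implementation: the class names and the string mode flag are hypothetical, and the fallback from the GPU path to the CPU path is an assumption made for this toy.

```python
class Layer:
    """Toy base class that picks the CPU or GPU path at call time."""

    def __init__(self, mode="CPU"):
        self.mode = mode

    def forward(self, x):
        if self.mode == "GPU":
            return self.forward_gpu(x)
        return self.forward_cpu(x)

    def forward_cpu(self, x):
        raise NotImplementedError  # every concrete layer must supply this

    def forward_gpu(self, x):
        # Assumed fallback: reuse the CPU path when no GPU version is given.
        return self.forward_cpu(x)

class ScaleLayer(Layer):
    """Concrete layer with a CPU implementation only."""

    def forward_cpu(self, x):
        return [2 * v for v in x]

class FastScaleLayer(ScaleLayer):
    """Concrete layer that overrides the GPU path as well."""

    def forward_gpu(self, x):
        return ("gpu", [2 * v for v in x])

print(ScaleLayer("GPU").forward([1, 2]))
print(FastScaleLayer("GPU").forward([1, 2]))
```

The caller only ever uses forward(); which implementation runs depends on the mode and on what the subclass overrides.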

Their specific implementations are stored in .cu files, located in the src/caffe/layers/ folder.

In src/caffe/layer_factory.cpp, if cuDNN is used, the corresponding cuDNN layer (conv, pooling, LRN, ...) is created.

When they process data, the blob (gpu) data is requested.

For convolution, the blobs for the weights, bottom and top are needed.

src/caffe/syncedmem.cpp handles the GPU data transfer, synchronous or asynchronous.

For the forward algorithm in cudnn_conv_layer.cu,

=>leiming: FWD

I0211 23:02:12.374897 18363 cudnn_conv_layer.cu:23] =>leiming: blobs[0] 20 1 5 5 (500)

I0211 23:02:12.374899 18363 cudnn_conv_layer.cu:24] =>leiming: bottom size :1

I0211 23:02:12.374990 18363 cudnn_conv_layer.cu:30] =>leiming: bottom[0] size:100 1 28 28 (78400)

I0211 23:02:12.374997 18363 cudnn_conv_layer.cu:31] =>leiming: top[0] size:100 20 24 24 (1152000)

I0211 23:02:12.375000 18363 cudnn_conv_layer.cu:32] =>leiming: groups: 1

I0211 23:02:12.375037 18363 cudnn_conv_layer.cu:48] =>leiming: using bias term: 1

I0211 23:02:12.375319 18363 cudnn_conv_layer.cu:51] =>leiming: blobs[1] 20 (20)

I0211 23:02:12.375555 18363 cudnn_conv_layer.cu:22]

This means that blobs[0] holds 20 filters, each with 1 channel, height 5 and width 5.
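The shapes and element counts in the log can be checked with a little arithmetic. This is a minimal sketch, assuming stride 1 and no padding (which is consistent with 28 shrinking to 24 for a 5x5 kernel) and groups equal to 1 as printed in the log. The function name conv_output_shape is hypothetical.

```python
from math import prod

def conv_output_shape(bottom, weights, stride=1, pad=0):
    """Return the (N, C, H, W) shape of the top blob for a plain convolution."""
    n, c, h, w = bottom
    num_out, c_in, kh, kw = weights
    assert c == c_in, "input channels must match the filter channels (groups = 1)"
    out_h = (h + 2 * pad - kh) // stride + 1
    out_w = (w + 2 * pad - kw) // stride + 1
    return (n, num_out, out_h, out_w)

bottom = (100, 1, 28, 28)   # bottom[0] from the log
weights = (20, 1, 5, 5)     # blobs[0] from the log
top = conv_output_shape(bottom, weights)

print(prod(weights))  # 500
print(prod(bottom))   # 78400
print(top, prod(top)) # (100, 20, 24, 24) 1152000
```

The counts in parentheses in the log are simply the product of the four dimensions.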

Saturday, October 24, 2015

Sunday, October 18, 2015

MNIST + Caffe

(http://caffe.berkeleyvision.org/gathered/examples/mnist.html)

cd $CAFFE_ROOT

./data/mnist/get_mnist.sh

./examples/mnist/create_mnist.sh

Define MNIST Network

Layers are defined in lenet_train_test.prototxt under $CAFFE_ROOT/examples/mnist/

Protocol buffers are a language-neutral, platform-neutral, extensible way of serializing structured data for use in communications protocols, data storage, and more.

The data structure is described in a .proto file. Compared with XML, protocol buffers (https://developers.google.com/protocol-buffers/docs/overview):

- are simpler

- are 3 to 10 times smaller

- are 20 to 100 times faster

- are less ambiguous

- generate data access classes that are easier to use programmatically

The protobuf definition for Caffe is stored in $CAFFE_ROOT/src/caffe/proto/caffe.proto

A Blob is a wrapper over the actual data being processed and passed along by Caffe, and also under the hood provides synchronization capability between the CPU and the GPU. Mathematically, a blob is an N-dimensional array stored in a C-contiguous fashion.
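"C-contiguous" means the last index varies fastest. For a 4-D blob with shape (N, C, H, W), the element at (n, c, h, w) sits at flat position ((n * C + c) * H + h) * W + w. A minimal sketch, where blob_offset is a hypothetical helper written for this post and the shape is only an example:

```python
def blob_offset(shape, n, c, h, w):
    """Flat index of element (n, c, h, w) in a C-contiguous 4-D array."""
    _, C, H, W = shape
    return ((n * C + c) * H + h) * W + w

shape = (100, 20, 24, 24)  # example shape, chosen for illustration

print(blob_offset(shape, 0, 0, 0, 1))      # next column: 1
print(blob_offset(shape, 0, 0, 1, 0))      # next row: 24
print(blob_offset(shape, 0, 1, 0, 0))      # next channel: 576
print(blob_offset(shape, 1, 0, 0, 0))      # next image: 11520
print(blob_offset(shape, 99, 19, 23, 23))  # last element: 1151999
```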

lr_mults are the learning rate adjustments for the layer’s learnable parameters. In this case, we will set the weight learning rate to be the same as the learning rate given by the solver during runtime, and the bias learning rate to be twice as large as that - this usually leads to better convergence rates. (http://caffe.berkeleyvision.org/gathered/examples/mnist.html)
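As a small worked example of the multipliers: the rate applied to a parameter is the solver's rate times that parameter's lr_mult. The sketch below assumes a base learning rate of 0.01, which is an illustrative value and not taken from this post. It also ignores any learning-rate schedule the solver applies over time.

```python
def effective_lr(base_lr, lr_mult):
    """Learning rate applied to one parameter blob (hypothetical helper)."""
    return base_lr * lr_mult

base_lr = 0.01  # assumed value, for illustration only

weight_lr = effective_lr(base_lr, 1)  # weights: same as the solver's rate
bias_lr = effective_lr(base_lr, 2)    # biases: twice the solver's rate

print(weight_lr, bias_lr)
```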

MNIST Solver

$CAFFE_ROOT/examples/mnist/lenet_solver.prototxt

Training and Testing the Model

cd $CAFFE_ROOT

./examples/mnist/train_lenet.sh

Data Flow In Details

[step 1]

~/caffe/tools/caffe.cpp

read the command line, calling ~/caffe/src/caffe/common.cpp

[step 2]

~/caffe/src/caffe/solver.cpp, initializing solver from parameters

[step 3]

solver creates a training net from net file: examples/mnist/lenet_train_test.prototxt

[step 4]

net.cpp, StateMeetsRule() function,

Caffe::root_solver(), Initializing net from parameters

[step 5]

In ~/caffe/include/caffe/layer_factory.hpp, CreateLayer() function

[step 6]

~/caffe/src/caffe/net.cpp

LOG(INFO) << layer_param->name() << " -> " << blob_name;

[step 7]

read lmdb

[step 8]

set up mnist

LOG_IF(INFO, Caffe::root_solver())

<< "Creating Layer " << layer_param.name();

[step 9]

Time to create the train and test networks:

21:32:45.919808 - 21:32:46.008837 Network initialization done.

Less than 1 second.

Optimization then runs until iteration 10000:

21:32:46.009042 - 21:33:24.866451 Optimization done.

39 seconds on GTX 760 using cuDNN
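The durations can be computed directly from the timestamps in the log. A minimal sketch, assuming both timestamps of a pair fall on the same day; elapsed_seconds is a hypothetical helper:

```python
from datetime import datetime

def elapsed_seconds(start, end):
    """Seconds between two HH:MM:SS.micros timestamps on the same day."""
    fmt = "%H:%M:%S.%f"
    delta = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    return delta.total_seconds()

init = elapsed_seconds("21:32:45.919808", "21:32:46.008837")
train = elapsed_seconds("21:32:46.009042", "21:33:24.866451")

print(round(init, 3))                 # network initialization, in seconds
print(round(train, 3))                # optimization, in seconds
print(round(train / 10000 * 1000, 2)) # average milliseconds per iteration
```

This gives about 0.089 seconds for initialization and about 38.857 seconds for the 10000 iterations, or roughly 3.9 ms per iteration on average (test passes included).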

Tutorials and Learning Examples

[1] http://radar.oreilly.com/2014/07/how-to-build-and-run-your-first-deep-learning-network.html

[2] http://corpocrat.com/2014/11/03/how-to-setup-caffe-to-run-deep-neural-network/

It is recommended to start with the digit-recognition example in order to understand the framework.

Sunday, October 4, 2015

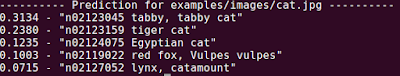

Examples_001_Classifying ImageNet: using the C++ API

A simple C++ example is provided in examples/cpp_classification/classification.cpp.

The classification example will be built as examples/classification.bin in your build directory.

In my case, it is in caffe/build/examples/cpp_classification/

Go to the scripts folder and run the Python script to download the model into bvlc_reference_caffenet:

~/caffe/scripts$ ./download_model_binary.py ../models/bvlc_reference_caffenet/

(You may need to install python-yaml first.)

sudo apt-get install python-yaml

sudo apt-get install libyaml-dev

Go to ~/caffe/data/ilsvrc12 and run ./get_ilsvrc_aux.sh to obtain the ImageNet labels file.

Classify the cat image (examples/images/cat.jpg) using this command:

./build/examples/cpp_classification/classification.bin \
  models/bvlc_reference_caffenet/deploy.prototxt \
  models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel \
  data/ilsvrc12/imagenet_mean.binaryproto \
  data/ilsvrc12/synset_words.txt \
  examples/images/cat.jpg
